Scalability is a key feature for big data analysis and machine learning frameworks and for applications that need to analyze very large and real-time data available from data repositories, social media, sensor networks, smartphones, and the Web. Scalable big data analysis today can be achieved by parallel implementations that are able to exploit the computing and storage facilities of high performance computing (HPC) systems and clouds, whereas in the near future Exascale systems will be used to implement extreme-scale data analysis. This paper discusses how clouds currently support the development of scalable data mining solutions, and outlines and examines the main challenges to be addressed and solved for implementing innovative data analysis applications on Exascale systems.

Solving problems in science and engineering was the first motivation for inventing computers. After a long time since then, computer science is still the main area in which innovative solutions and technologies are being developed and applied. Also due to the extraordinary advancement of computer technology, nowadays data are generated as never before. In fact, the amount of structured and unstructured digital datasets is going to increase beyond any estimate. Databases, file systems, data streams, social media and data repositories are increasingly pervasive and decentralized.

As the data scale increases, we must address new challenges and attack ever-larger problems. New discoveries will be achieved and more accurate investigations can be carried out due to the increasingly widespread availability of large amounts of data. Scientific sectors that fail to make full use of the huge amounts of digital data available today risk losing out on the significant opportunities that big data can offer.

To benefit from the big data availability, specialists and researchers need advanced data analysis tools and applications running on scalable architectures allowing for the extraction of useful knowledge from such huge data sources. High performance computing (HPC) systems and cloud computing systems today are capable platforms for addressing both the computational and data storage needs of big data mining and parallel knowledge discovery applications. These computing architectures are needed to run data analysis because complex data mining tasks involve data- and compute-intensive algorithms that require large, reliable and effective storage facilities together with high performance processors to get results in appropriate times.

Now that data sources have become very big and pervasive, reliable and effective programming tools and applications for data analysis are needed to extract value and find useful insights in them. New ways to correctly and proficiently compose different distributed models and paradigms are required, and the interaction between hardware resources and programming levels must be addressed. Users, professionals and scientists working in the area of big data need advanced data analysis programming models and tools coupled with scalable architectures to support the extraction of useful information from such massive repositories. The scalability of a parallel computing system is a measure of its capacity to reduce program execution time in proportion to the number of its processing elements (the Appendix introduces and discusses scalability in parallel systems in detail). According to this definition, scalable data analysis refers to the ability of a hardware/software parallel system to exploit increasing computing resources effectively in the analysis of (very) large datasets.
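As a rough illustration of this notion of scalability, the following Python sketch computes the usual speedup and parallel efficiency figures from measured execution times. It assumes the standard textbook definitions (speedup as serial time over parallel time, efficiency as speedup per processing element); the function names and the timings are hypothetical and are not taken from the paper.

```python
def speedup(t_serial, t_parallel):
    """Speedup: how many times faster the parallel run is than the serial run."""
    return t_serial / t_parallel


def efficiency(t_serial, t_parallel, n_procs):
    """Parallel efficiency: speedup divided by the number of processing elements."""
    return speedup(t_serial, t_parallel) / n_procs


# Hypothetical execution times (seconds) measured on 1, 2, 4 and 8 processing elements.
timings = {1: 120.0, 2: 62.0, 4: 33.0, 8: 20.0}
t1 = timings[1]

for n_procs, t_p in timings.items():
    s = speedup(t1, t_p)
    e = efficiency(t1, t_p, n_procs)
    print(f"p={n_procs}: speedup={s:.2f}, efficiency={e:.2f}")
```

A system scales well when efficiency stays close to 1 as processing elements are added; in the made-up timings above, efficiency drops as the number of processing elements grows, which is the typical sign of communication or synchronization overhead.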

Today, complex analysis of real-world massive data sources requires using high-performance computing systems such as massively parallel machines or clouds. However, in the coming years, as parallel technologies advance, Exascale computing systems will be exploited for implementing scalable big data analysis in all the areas of science and engineering [23]. To reach this goal, new design and programming challenges must be addressed and solved. The focus of the paper is on discussing current cloud-based design and programming solutions for data analysis and suggesting new programming requirements and approaches to be conceived for meeting big data analysis challenges on future Exascale platforms.

Current cloud computing platforms and parallel computing systems represent two different technological solutions for addressing the computational and data storage needs of big data mining and parallel knowledge discovery applications. Indeed, parallel machines offer high-end processors with the main goal of supporting HPC applications, whereas cloud systems implement a computing model in which virtualized, dynamically scalable resources are provided to users and developers as a service over the Internet. In fact, clouds do not mainly target HPC applications; they provide scalable computing and storage delivery platforms that can be adapted to the needs of different classes of people and organizations by exploiting the Service-Oriented Architecture (SOA) approach. Clouds offer large facilities to many users who were unable to own parallel/distributed computing systems to run their applications and services. In particular, big data analysis applications that need to access and manipulate very large datasets with complex mining algorithms will significantly benefit from the use of cloud platforms.

Although not many cloud-based data analysis frameworks are available today for end users, within a few years they will become common [29]. Some current solutions are based on open source systems, such as Apache Hadoop and Mahout, Spark and SciDB, while others are proprietary solutions provided by companies such as Google, Microsoft, EMC, Amazon, BigML, Splunk Hunk, and InsightsOne. As more such platforms emerge, researchers and professionals will port increasingly powerful data mining programming tools and frameworks to the cloud to exploit complex and flexible software models such as the distributed workflow paradigm. The growing utilization of the service-oriented computing model could accelerate this trend.

From the definition of the term big data, which refers to datasets so large and complex that traditional hardware and software data processing solutions are inadequate to manage and analyze them, we can infer that conventional computer systems are not powerful enough to process and mine big data [28] and are not able to scale with the size of the problems to be solved. As mentioned before, to overcome the limits of sequential machines, advanced systems such as HPC systems, clouds and even more scalable architectures are used today to analyze big data. Starting from this scenario, Exascale computing systems will represent the next computing step [1, 34]. Exascale systems are high performance computing systems capable of at least one exaFLOPS, so their implementation represents a very significant research and technology challenge. Their design and development is currently under investigation with the goal of building by 2020 high-performance computers composed of a very large number of multi-core processors expected to deliver a performance of 10^18 operations per second. Cloud computing systems used today are able to store very large amounts of data; however, they do not provide the high performance expected from massively parallel Exascale systems. This is the main motivation for developing Exascale systems. Exascale technology will represent the most advanced model of supercomputers. Exascale systems have been conceived for single-site supercomputing centers, not for distributed infrastructures such as multi-clouds or fog computing systems, which are aimed at decentralized computing and pervasive data management. Such distributed infrastructures could, however, be interconnected with Exascale systems used as a backbone for very large scale data analysis.

The development of Exascale systems requires addressing and solving issues and challenges at both the hardware and the software level. Indeed, it requires designing and implementing novel software tools and runtime systems able to manage a very high degree of parallelism, reliability and data locality in extreme scale computers [14]. New programming constructs and runtime mechanisms are needed that can adapt to the most appropriate degree of parallelism and communication decomposition in order to make data analysis tasks scalable and reliable. Their dependence on parallelism grain size and data analysis task decomposition must be studied in depth. This is needed because parallelism exploitation depends on several features such as parallel operations, communication overhead, input data size, I/O speed, problem size, and hardware configuration. Moreover, reliability and reproducibility are two additional key challenges to be addressed. Indeed, at the programming level, constructs for handling and recovering from communication, data access, and computing failures must be designed. At the same time, reproducibility in scalable data analysis requires rich information useful to ensure similar results in environments that may change dynamically. All these factors must be taken into account in designing data analysis applications and tools that will be scalable on Exascale systems.

Moreover, reliable and effective methods for storing, accessing and communicating data, intelligent techniques for massive data analysis, and software architectures enabling the scalable extraction of knowledge from data are needed [28]. To reach this goal, models and technologies enabling cloud computing systems and HPC architectures must be extended/adapted or completely changed to be reliable and scalable on the very large number of processors/cores that compose extreme scale platforms and to support the implementation of clever data analysis algorithms that ought to be scalable and dynamic in resource usage. Exascale computing infrastructures will play the role of an extraordinary platform for addressing both the computational and data storage needs of big data analysis applications. However, as mentioned before, to complete this scenario, efforts must be made to implement big data analytics algorithms, architectures, programming tools and applications in Exascale systems [24].

Pursuing this objective within a few years, scalable data access and analysis systems will become the most used platforms for big data analytics on large-scale clouds. In a longer perspective, new Exascale computing infrastructures will appear as the platforms for big data analytics in the next decades, and data mining algorithms, tools and applications will be ported on such platforms for implementing extreme data discovery solutions.

In this paper we first discuss cloud-based scalable data mining and machine learning solutions, then we examine the main research issues that must be addressed for implementing massively parallel data mining applications on Exascale computing systems. Data-related issues are discussed together with communication, multi-processing, and programming issues. Section II introduces issues and systems for scalable data analysis on clouds and Section III discusses design and programming issues for big data analysis in Exascale systems. Section IV completes the paper also outlining some open design challenges.

Clouds implement elastic services, scalable performance and scalable data storage used by a large and ever-increasing number of users and applications [2, 12]. In fact, clouds enlarged the arena of distributed computing systems by providing advanced Internet services that complement and complete the functionalities of distributed computing provided by the Web, Grid systems and peer-to-peer networks. In particular, most cloud computing applications use big data repositories stored within the cloud itself, so in those cases large datasets are analyzed with low latency to effectively extract data analysis models.

Big data is a new and over-used term that refers to massive, heterogeneous, and often unstructured digital content that is difficult to process using traditional data management tools and techniques. The term includes the complexity and variety of data and data types, real-time data collection and processing needs, and the value that can be obtained by smart analytics. However, we should recognize that data are not necessarily important per se; they become very important if we are able to extract value from them, that is, if we can exploit them to make discoveries. The extraction of useful knowledge from big digital datasets requires smart and scalable analytics algorithms, services, programming tools, and applications. All these solutions, which are needed to find insights in big data, will contribute to making big data really useful for people.

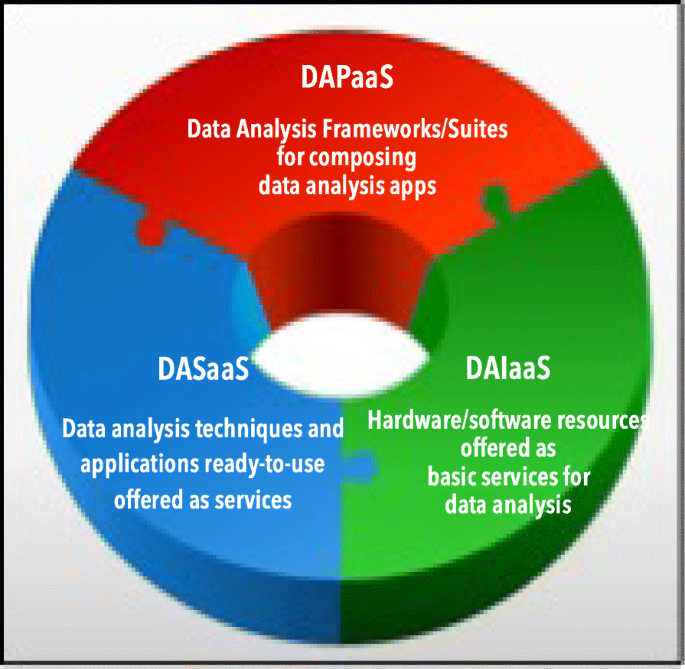

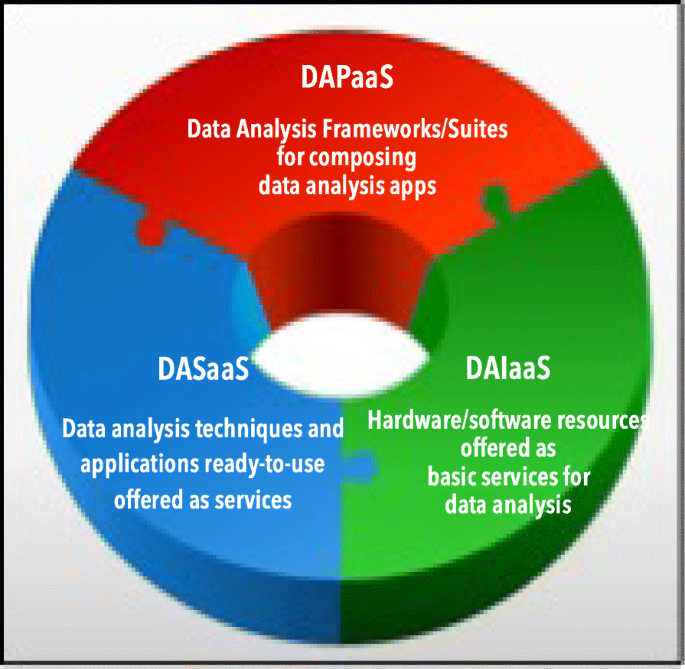

The growing use of service-oriented computing is accelerating the use of cloud-based systems for scalable big data analysis. Developers and researchers are adopting the three main cloud models, software as a service (SaaS), platform as a service (PaaS), and infrastructure as a service (IaaS), to implement big data analytics solutions in the cloud [27, 31]. According to a specialization of these three models, data analysis tasks and applications can be offered as services at the infrastructure, platform or software level and made available at any time from anywhere. A methodology for implementing them defines a new model stack to deliver data analysis solutions that is a specialization of the XaaS (Everything as a Service) stack and is called Data Analysis as a Service (DAaaS). It adapts and specifies the three general service models (SaaS, PaaS and IaaS) to support the structured development of big data analysis systems, tools and applications according to a service-oriented approach. The DAaaS methodology is therefore based on the three basic models for delivering data analysis services at different levels as described here (see also Fig. 1):

Using the DAaaS methodology we designed a cloud-based system, the Data Mining Cloud Framework (DMCF) [17], which supports three main classes of data analysis and knowledge discovery applications:

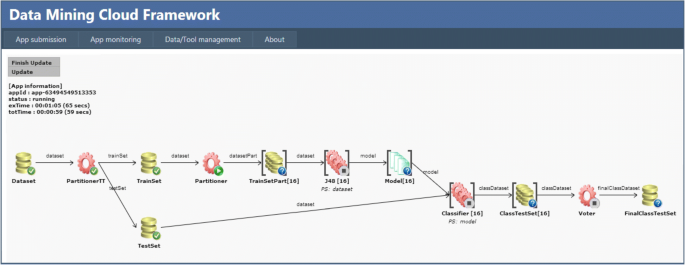

DMCF includes a large variety of processing patterns to express knowledge discovery workflows as graphs whose nodes denote resources (datasets, data analysis tools, mining models) and whose edges denote dependencies among resources. A Web-based user interface allows users to compose their applications and submit them for execution to the Cloud platform, following the data analysis software as a service approach. Visual workflows can be programmed in DMCF through a language called VL4Cloud (Visual Language for Cloud), whereas script-based workflows can be programmed by JS4Cloud (JavaScript for Cloud), a JavaScript-based language for data analysis programming.

Figure 2 shows a sample data mining workflow composed of several sequential and parallel steps. It is just an example for presenting the main features of the VL4Cloud programming interface [17]. The example workflow analyses a dataset by using n instances of a classification algorithm, which work on n portions of the training set and generate the same number of knowledge models. By using the n generated models and the test set, n classifiers produce in parallel n classified datasets (n classifications). In the final step of the workflow, a voter generates the final classification by assigning a class to each data item, by choosing the class predicted by the majority of the models.
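The structure of this workflow (partition the training set, train n models in parallel, classify the test set in parallel, then vote) can be sketched in plain Python. This is only an illustration of the pattern, not VL4Cloud or DMCF code: the toy nearest-mean classifier, the function names and the tiny dataset are all assumptions made for the example, and a thread pool stands in for the cloud virtual machines.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def partition(items, n):
    """Split the training set into n round-robin portions."""
    return [items[i::n] for i in range(n)]


def train(portion):
    """Toy classifier (an assumption): store the mean feature value of each class."""
    sums, counts = {}, {}
    for value, label in portion:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def classify(model, test_set):
    """Assign each test value the class whose stored mean is nearest."""
    return [min(model, key=lambda label: abs(model[label] - x)) for x in test_set]


def vote(classifications):
    """Majority vote, item by item, across the n classified datasets."""
    return [Counter(labels).most_common(1)[0][0] for labels in zip(*classifications)]


def run_workflow(training_set, test_set, n):
    portions = partition(training_set, n)
    with ThreadPoolExecutor(max_workers=n) as pool:
        # n training tasks run in parallel, one per portion.
        models = list(pool.map(train, portions))
        # n classification tasks run in parallel, one per model.
        classifications = list(pool.map(lambda m: classify(m, test_set), models))
    return vote(classifications)


training_set = [(1.0, "low"), (1.2, "low"), (0.8, "low"),
                (5.0, "high"), (5.3, "high"), (4.7, "high")]
test_set = [0.9, 5.1, 1.1]
result = run_workflow(training_set, test_set, n=3)
print(result)
```

Using an odd number of models avoids ties in the voter for two-class problems; a real workflow would also need a policy for portions in which some class is missing.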

Although DMCF has been mainly designed to coordinate coarse-grain data and task parallelism in big data analysis applications by exploiting the workflow paradigm, the DMCF script-based programming interface (JS4Cloud) also allows fine-grain operations in data mining algorithms to be parallelized, as it permits programming any data mining algorithm, such as classification, clustering and others, in a JavaScript style. This can be done because loops and data-parallel methods are run in parallel on the virtual machines of a Cloud [16, 26].

Like DMCF, other innovative cloud-based systems designed for programming data analysis applications are: Apache Spark, Sphere, Swift, Mahout, and CloudFlows. Most of them are open source. Apache Spark is an open-source framework developed at UC Berkeley for in-memory data analysis and machine learning [34]. Spark has been designed to run both batch processing and dynamic applications like streaming, interactive queries, and graph analysis. Spark provides developers with a programming interface centered on a data structure called the resilient distributed dataset (RDD), that is a read-only multi-set of data items distributed over a cluster of machines, that is maintained in a fault-tolerant way. Differently from other systems and from Hadoop, Spark stores data in memory and queries it repeatedly so as to obtain better performance. This feature can be useful for a future implementation of Spark on Exascale systems.
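The idea of a read-only, partitioned dataset that is kept in memory and queried repeatedly can be illustrated with a small plain-Python toy. This is deliberately not the Spark API: the class `ToyDataset` and its methods are hypothetical stand-ins, everything runs in a single process, and fault tolerance is not modeled.

```python
from functools import reduce


class ToyDataset:
    """Hypothetical stand-in for a read-only partitioned dataset (not Spark's RDD)."""

    def __init__(self, partitions):
        # Tuples make the partitions immutable, mimicking the read-only property.
        self._partitions = tuple(tuple(p) for p in partitions)

    def map(self, fn):
        """Return a new dataset; the original is never modified."""
        return ToyDataset([[fn(x) for x in p] for p in self._partitions])

    def filter(self, pred):
        return ToyDataset([[x for x in p if pred(x)] for p in self._partitions])

    def reduce(self, fn):
        """Reduce each non-empty partition, then combine the partial results.

        fn is assumed to be associative, as in partition-wise reductions.
        """
        partials = [reduce(fn, p) for p in self._partitions if p]
        return reduce(fn, partials)


readings = ToyDataset([[3, 8, 1], [9, 4], [7, 2, 6]])

# The derived dataset stays in memory and is queried twice without recomputation.
squares = readings.map(lambda x: x * x)
total = squares.reduce(lambda a, b: a + b)
large = squares.filter(lambda x: x > 30).reduce(lambda a, b: a + b)
print(total, large)
```

In a real distributed setting each partition would live on a different machine, and the per-partition work inside `map`, `filter` and `reduce` would run in parallel.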

Swift is a workflow-based framework for implementing functional data-driven task parallelism in data-intensive applications. The Swift language provides a functional programming paradigm where workflows are designed as a set of calls with associated command-line arguments and input and output files. Swift uses an implicit data-driven task parallelism [32]. In fact, it looks like a sequential language, but being a dataflow language, all variables are futures, thus execution is based on data availability. Parallelism can be also exploited through the use of the foreach statement. Swift/T is a new implementation of the Swift language for high-performance computing. In this implementation, a Swift program is translated into an MPI program that uses the Turbine and ADLB runtime libraries for scalable dataflow processing over MPI. Recently a porting of Swift/T on very large cloud systems for the execution of very many tasks has been investigated.
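The data-driven execution style described here, where variables behave as futures and a task runs as soon as its inputs are available, can be approximated in Python with `concurrent.futures`. The sketch below is an analogy, not Swift code: the task functions `simulate`, `summarize` and `combine` are hypothetical, and Python requires the futures to be made explicit whereas Swift keeps them implicit.

```python
from concurrent.futures import ThreadPoolExecutor


def simulate(seed):
    """Hypothetical task producing a small list of values."""
    return [(seed * k) % 7 for k in range(1, 6)]


def summarize(values):
    """Hypothetical task consuming the output of simulate."""
    return sum(values) / len(values)


def combine(a, b):
    """Hypothetical final task that needs both summaries."""
    return max(a, b)


with ThreadPoolExecutor(max_workers=4) as pool:
    # The two simulations have no mutual dependency, so they may run concurrently.
    sim_a = pool.submit(simulate, 3)
    sim_b = pool.submit(simulate, 5)

    # Each summary blocks only on its own input future (data availability).
    avg_a = pool.submit(lambda: summarize(sim_a.result()))
    avg_b = pool.submit(lambda: summarize(sim_b.result()))

    # The final task becomes runnable once both summaries are available.
    best = pool.submit(lambda: combine(avg_a.result(), avg_b.result()))
    result = best.result()

    # Rough analogue of a parallel foreach over independent inputs.
    all_runs = list(pool.map(simulate, range(1, 4)))

print(result, all_runs)
```

Tasks are submitted in dependency order, so a task only ever waits on futures created before it; this keeps the sketch free of deadlock even with a small worker pool.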

Unlike the other frameworks discussed here, DMCF is the only system that offers both a visual and a script-based programming interface. Visual programming is a very convenient design approach for high-level users, such as domain-expert analysts having a limited understanding of programming. On the other hand, script-based workflows are a useful paradigm for expert programmers, who can code complex applications rapidly, in a more concise way and with greater flexibility. Finally, the workflow-based model exploited in DMCF and Swift makes these frameworks of more general use than Spark, which offers a very restricted set of programming patterns (e.g., map, filter and reduce), thus limiting the variety of data analysis applications that can be implemented with it.

These and other related systems are currently used for the development of big data analysis applications on HPC and cloud platforms. However, additional research work in this field must be done and the development of new models, solutions and tools is needed [13, 24]. Just to mention a few, active and promising research topics are listed here ordered by importance factors:

As we discussed in the previous sections, data analysis has gained a primary role because of the very large availability of datasets and the continuous advancement of methods and algorithms for finding knowledge in them. Data analysis solutions advance by exploiting the power of data mining and machine learning techniques and are changing several scientific and industrial areas. For example, the amount of data that social media generate daily is impressive and continuous. Some hundreds of terabytes of data, including several hundreds of millions of photos, are uploaded daily to Facebook and Twitter.

Therefore, it is central to design scalable solutions for processing and analyzing such massive datasets. As a general forecast, IDC experts estimate that the data generated will reach about 45 zettabytes worldwide by 2020 [6]. This impressive amount of digital data calls for scalable high performance data analysis solutions. However, today only one-quarter of the digital data available would be a candidate for analysis, and about 5% of that is actually analyzed. By 2020, the useful percentage could grow to about 35%, also thanks to data mining technologies.

Scalability and performance requirements are challenging conventional data stores, file systems and database management systems. The architectures of such systems have reached their limits in handling very large processing tasks involving petabytes of data because they were not built to scale beyond a given threshold. This condition calls for new architectures and analytics platform solutions that can process big data to extract complex predictive and descriptive models [30]. Exascale systems, both from the hardware and the software side, can play a key role in supporting solutions for these problems [23].

An IBM study reports that we are generating around 2.5 exabytes of data per day. Because of that continuous and explosive growth of data, many applications require the use of scalable data analysis platforms. A well-known example is the ATLAS detector from the Large Hadron Collider at CERN in Geneva. The ATLAS infrastructure has a capacity of 200 PB of disk and 300,000 cores, with more than 100 computing centers connected via 10 Gbps links. The data collection rate is very high and only a portion of the data produced by the collider is stored. Several teams of scientists run complex applications to analyze subsets of those huge volumes of data. This analysis would be impossible without a high-performance infrastructure that supports data storage, communication and processing. Computational astronomers are also collecting and producing larger and larger datasets each year that cannot be stored and processed without scalable infrastructures. Another significant case is represented by the Energy Sciences Network (ESnet), the US Department of Energy’s high-performance network managed by Berkeley Lab, which in late 2012 rolled out a 100 gigabits-per-second national network to accommodate the growing scale of scientific data.

If we go from science to society, social data and e-health are good examples to discuss. Social networks, such as Facebook and Twitter, have become very popular and are receiving increasing attention from the research community since, through the huge amount of user-generated data, they provide valuable information concerning human behavior, habits, and travels. When the volume of data to be analyzed is of the order of terabytes or petabytes (billions of tweets or posts), scalable storage and computing solutions must be used, but no clear solutions exist today for the analysis of Exascale datasets. The same occurs in the e-health domain, where huge amounts of patient data are available and can be used for improving therapies, for forecasting and tracking health data, and for the management of hospitals and health centers. Very complex data analysis in this area will need novel hardware/software solutions; however, Exascale computing is still promising in other scientific fields where scalable storage systems and databases are not used/required. Examples of scientific disciplines where future Exascale computing will be extensively used are quantum chromodynamics, materials simulation, molecular dynamics, materials design, earthquake simulations, subsurface geophysics, climate forecasting, nuclear energy, and combustion. All those applications require the use of sophisticated models and algorithms to solve complex equation systems that will benefit from the exploitation of Exascale systems.

Implementing scalable data analysis applications in Exascale computing systems is a very complex job and it requires high-level fine-grain parallel models, appropriate programming constructs and skills in parallel and distributed programming. In particular, mechanisms and expertise are needed for expressing task dependencies and inter-task parallelism, for designing synchronization and load balancing mechanisms, for handling failures, and for properly managing distributed memory and concurrent communication among a very large number of tasks. Moreover, when the target computing infrastructures are heterogeneous and require different libraries and tools to program applications on them, the programming issues are even more complex. To cope with some of these issues in data-intensive applications, different scalable programming models have been proposed [5].

Scalable programming models may be categorized by

Using high-level scalable models, a programmer defines only the high-level logic of an application while hiding the low-level details that are not essential for application design, including infrastructure-dependent execution details. The programmer is assisted in application definition, and application performance depends on the compiler that analyzes the application code and optimizes its execution on the underlying infrastructure. On the other hand, low-level scalable models allow programmers to interact directly with the computing and storage elements composing the underlying infrastructure and thus to define the application's parallelism directly.

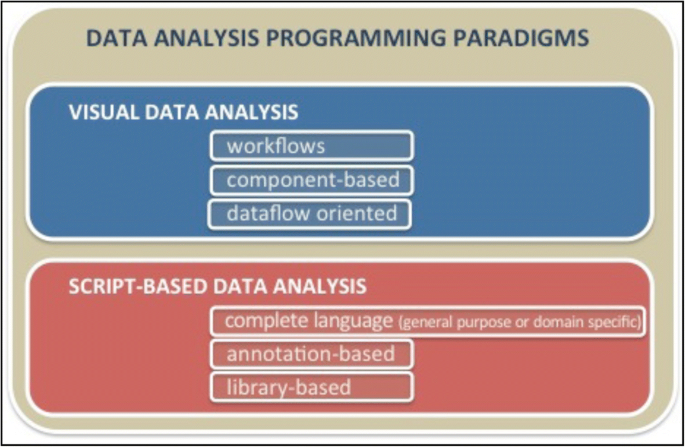

Data analysis applications implemented by some frameworks can be programmed through a visual interface, which is a convenient design approach for high-level users, for instance domain-expert analysts having a limited understanding of programming. In addition, a visual representation of workflows or components intrinsically captures parallelism at the task level, without the need to make parallelism explicit through control structures [14]. Visual-based data analysis is typically implemented by providing workflow-based languages or component-based paradigms (Fig. 3). Dataflow-based approaches, which share the same application structure with workflows, are also used. However, in dataflow models, the grain of parallelism and the size of data items are generally smaller than in workflows. In general, visual programming tools are not very flexible because they often implement a limited set of visual patterns and provide restricted ways to configure them. To address this issue, some visual languages provide users with the possibility to customize the behavior of patterns by adding code that specifies the operations executed by a specific pattern when an event occurs.

On the other hand, code-based (or script-based) formalism allows users to program complex applications more rapidly, in a more concise way, and with higher flexibility [16]. Script-based applications can be designed in different ways (see Fig. 3):

Given the variety of data analysis applications and classes of users (from skilled programmers to end users) that can be envisioned for future Exascale systems, there is a need for scalable programming models with different levels of abstractions (high-level and low-level) and different design formalisms (visual and script-based), according to the classification outlined above.

As we discussed, data-intensive applications are software programs that have a significant need to process large volumes of data [9]. Such applications devote most of their processing time to running I/O operations and to exchanging and moving data among the processing elements of a parallel computing infrastructure. Parallel processing in data analysis applications typically involves accessing, pre-processing, partitioning, distributing, aggregating, querying, mining, and visualizing data that can be processed independently.

The main challenges for programming data analysis applications on Exascale computing systems come from potential scalability, network latency and reliability, reproducibility of data analysis, and resilience of the mechanisms and operations offered to developers for accessing, exchanging and managing data. Indeed, processing very large data volumes requires operations and new algorithms able to scale in loading, storing, and processing massive amounts of data that generally must be partitioned into very small data grains, on which thousands to millions of simple parallel operations perform the analysis.

Exascale systems force new requirements on programming systems to target platforms with hundreds of homogeneous and heterogeneous cores. Evolutionary models have been recently proposed for Exascale programming that extend or adapt traditional parallel programming models like MPI (e.g., EPiGRAM [15] that uses a library-based approach, Open MPI for Exascale in the ECP initiative), OpenMP (e.g., OmpSs [8] that exploits an annotation-based approach, the SOLLVE project), and MapReduce (e.g., Pig Latin [22] that implements a domain-specific complete language). These new frameworks limit the communication overhead in message passing paradigms or limit the synchronization control if a shared-memory model is used [11].

As Exascale systems are likely to be based on large distributed memory hardware, MPI is one of the most natural programming systems. MPI is currently used on about one million cores or more; therefore, it is reasonable to expect MPI to be one of the programming paradigms used on Exascale systems. The same holds for MapReduce-based libraries, which today are run on very large HPC and cloud systems. Both these paradigms are largely used for implementing big data analysis applications. As expected, general MPI all-to-all communication does not scale well in Exascale environments; to solve this issue, new MPI releases introduced neighbor collectives to support sparse “all-to-some” communication patterns that limit the data exchange to limited regions of processors [11].
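A back-of-the-envelope count shows why dense all-to-all exchanges become problematic at this scale while sparse neighbor exchanges do not. The Python sketch below counts logical point-to-point messages only; it assumes each process has a fixed number of neighbors (4, as on a two-dimensional torus, which is an assumption for the example) and it ignores the optimized algorithms that real MPI implementations use for collectives.

```python
def all_to_all_messages(n_procs):
    """Logical messages when every process sends to every other process."""
    return n_procs * (n_procs - 1)


def neighbor_messages(n_procs, degree):
    """Logical messages when every process sends only to `degree` neighbors."""
    return n_procs * degree


# Assumed neighborhood size: 4 neighbors per process, as on a 2-D torus.
DEGREE = 4

for n_procs in (1_000, 1_000_000):
    dense = all_to_all_messages(n_procs)
    sparse = neighbor_messages(n_procs, DEGREE)
    print(f"p={n_procs}: all-to-all={dense}, neighbor={sparse}, ratio={dense / sparse:.0f}")
```

The dense pattern grows quadratically with the number of processes, while the neighbor pattern grows linearly, so the gap between the two widens as the machine gets larger.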

Ensuring the reliability of Exascale systems requires a holistic approach including several hardware and software technologies for both predicting crashes and keeping systems stable despite failures. In the runtime of parallel APIs such as MPI, and of MapReduce-based libraries such as Hadoop, behaving correctly in case of processor failure requires a reliable communication layer built on top of the lower unreliable layer. This reliable layer must implement a correct protocol that works safely with every implementation of the unreliable layer, which by itself cannot tolerate crashes of the processors on which it runs. Concerning MapReduce frameworks, reference [18] reports on an adaptive MapReduce framework, called P2P-MapReduce, which has been developed to manage node churn, master node failures, and job recovery in a decentralized way, so as to provide a more reliable MapReduce middleware that can be effectively exploited in dynamic large-scale infrastructures.

On the other hand, new complete languages such as X10 [29], ECL [33], UPC [21], Legion [3], and Chapel [4] have been defined by exploiting in them a data-centric approach. Furthermore, new APIs based on a revolutionary approach, such as GA [20] and SHMEM [19], have been implemented according to a library-based model. These novel parallel paradigms are devised to address the requirements of data processing using massive parallelism. In particular, languages such as X10, UPC, and Chapel and the GA library are based on a partitioned global address space (PGAS) memory model that is suited to implement data-intensive Exascale applications because it uses private data structures and limits the amount of shared data among parallel threads.

Together with different approaches, such as Pig Latin and ECL, those programming models, languages and APIs must be further investigated, designed and adapted for providing data-centric scalable programming models useful to support the reliable and effective implementation of Exascale data analysis applications composed of up to millions of computing units that process small data elements and exchange them with a very limited set of processing elements. PGAS-based models, data-flow and data-driven paradigms, and local-data approaches today represent promising solutions that could be used for Exascale data analysis programming. The APGAS model is, for example, implemented in the X10 language, where it is based on the notions of places and asynchrony. A place is an abstraction of shared, mutable data and worker threads operating on the data. A single APGAS computation can consist of hundreds or potentially tens of thousands of places. Asynchrony is implemented by a single block-structured control construct async. Given a statement ST, the construct async ST executes ST in a separate thread of control. Memory locations in one place can contain references to locations at other places. To compute upon data at another place, the at(p) ST statement must be used. It allows the task to change its place of execution to p, executes ST at p and returns, leaving behind tasks that may have been spawned during the execution of ST.
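The notions of places, asynchronous tasks and place-shifting can be mimicked in Python to make the control flow concrete. The sketch below is an analogy under stated assumptions, not X10 code: the `Place` class, its `run_async` method and the `at` helper are hypothetical names, each place is modeled as a small thread pool holding its own local data, and waiting on the futures plays the role of X10's `finish`.

```python
from concurrent.futures import ThreadPoolExecutor, wait


class Place:
    """Hypothetical model of a place: local mutable data plus worker threads."""

    def __init__(self, pid, data):
        self.pid = pid
        self.data = list(data)
        self._workers = ThreadPoolExecutor(max_workers=2)

    def run_async(self, fn):
        """Rough analogue of `async ST`: run fn in a separate worker of this place."""
        return self._workers.submit(fn, self)

    def shutdown(self):
        self._workers.shutdown()


def at(place, fn):
    """Rough analogue of `at(p) ST`: execute fn at `place`, return when it is done."""
    return place.run_async(fn).result()


# Four places, each owning a disjoint block of the data.
places = [Place(i, range(i * 10, i * 10 + 5)) for i in range(4)]

# Spawn one asynchronous task per place; each task touches only local data.
futures = [p.run_async(lambda here: sum(here.data)) for p in places]
wait(futures)  # plays the role of X10's `finish`

partial_sums = [f.result() for f in futures]
total = sum(partial_sums)

# Shift to place 0 to compute on the data that lives there.
first_max = at(places[0], lambda here: max(here.data))

for p in places:
    p.shutdown()

print(partial_sums, total, first_max)
```

Only the small partial results cross place boundaries; the bulk data never leaves the place that owns it, which is the locality property that makes this model attractive for data analysis.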

Another interesting language based on the PGAS model is Chapel [4]. Its locality mechanisms can be effectively used for scalable data analysis where light data mining (sub-)tasks are run on local processing elements and partial results must be exchanged. Chapel data locality provides control over where data values are stored and where tasks execute, so that developers can ensure that parallel data analysis computations execute near the variables they access, or vice versa, to minimize communication and synchronization costs. For example, Chapel programmers can specify how domains and arrays are distributed among the system nodes. Another appealing feature of Chapel is the expression of synchronization in a data-centric style. By associating synchronization constructs with data (variables), locality is enforced and data-driven parallelism can be easily expressed also at very large scale. In Chapel, locales and domains are abstractions for referring to machine resources and for mapping tasks and data to them. Locales are language abstractions for naming a portion of a target architecture (e.g., a GPU, a single core or a multicore node) that has processing and storage capabilities. A locale specifies where (on which processing node) to execute tasks/statements/operations. For example, in a system composed of 4 locales

for executing the method Filter(D) on the first locale, we can use

and to execute the K-means algorithm on the 4 locales we can use